BLAS, BLASter, BLAStest: Some benchmark results, and a benchmarking framework

Usage of accelerated BLAS libraries seems to be shrouded in some mystery, judging from the fairly regular requests for help on lists such as r-sig-hpc (gmane version), the R list dedicated to High-Performance Computing. Yet it doesn't have to be; installation can be really simple (on appropriate systems).

Another issue that I felt needed addressing was a comparison between the different alternatives available, quite possibly including GPU computing. So a few weeks ago I sat down and wrote a small package to run, collect, analyse and visualize some benchmarks. That package, called gcbd (more about the name below), is now on CRAN as of this morning. The package facilitates the data collection for the paper it also contains (in the vignette form common among R packages), and it provides code to analyse the data, which is also included as a SQLite database. All this is done in the Debian and Ubuntu context by transparently installing and removing suitable packages providing BLAS implementations, so that we can fully automate data collection over several competing implementations via a single script (which is also included). Contributions of benchmark results are encouraged; that is the idea of the package.
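To make the run/collect/analyse loop concrete, here is a minimal sketch of the general idea. It is not code from gcbd: it is written in Python using only the standard library so that it runs anywhere, it times a deliberately naive pure-Python matrix multiplication rather than a real BLAS call, and the table name `benchmark` and its columns are hypothetical rather than the package's actual schema.

```python
import sqlite3
import time


def naive_matmul(a, b):
    """Multiply two square matrices given as lists of lists (pure Python)."""
    bt = list(zip(*b))  # transpose b so its columns can be walked as rows
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def time_once(n):
    """Return the elapsed seconds for one n x n matrix multiplication."""
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    start = time.perf_counter()
    naive_matmul(a, a)
    return time.perf_counter() - start


def run_benchmark(conn, method, sizes, runs=3):
    """Time each size `runs` times and store one row per run.

    The table layout is an assumption made for this sketch.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS benchmark "
        "(method TEXT, nobs INTEGER, run INTEGER, elapsed REAL)"
    )
    for n in sizes:
        for r in range(runs):
            conn.execute(
                "INSERT INTO benchmark VALUES (?, ?, ?, ?)",
                (method, n, r, time_once(n)),
            )
    conn.commit()


def summarise(conn):
    """Return (method, nobs, mean elapsed) rows ordered by matrix size."""
    return conn.execute(
        "SELECT method, nobs, AVG(elapsed) FROM benchmark "
        "GROUP BY method, nobs ORDER BY nobs"
    ).fetchall()


# Collect a few timings into an in-memory database and print the averages.
conn = sqlite3.connect(":memory:")
run_benchmark(conn, "pure-python", sizes=[10, 20, 40])
for method, nobs, mean_elapsed in summarise(conn):
    print(f"{method:12s} n={nobs:4d} mean={mean_elapsed:.6f}s")
```

Keeping one row per run, rather than only an average, leaves the variability visible to whoever analyses the data later. Swapping the BLAS implementation between calls to `run_benchmark` is the part the package automates through the distribution's package manager, and it is not attempted here.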

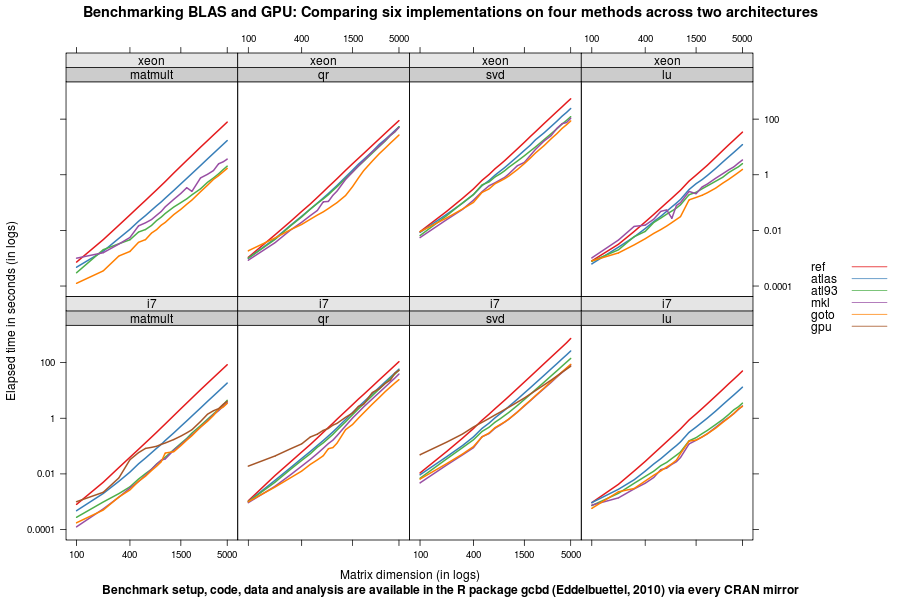

The paper itself describes the background and technical details before presenting the results. The benchmark compares the basic reference BLAS, Atlas (both single- and multithreaded), Goto, Intel MKL and a GPU-based approach. This blog post is not the place to recap all results, so please do see the paper for more details. But one summary chart regrouping the main results fits well here:

This chart, in log/log form, shows how reference BLAS lags behind everything else, how the newer multithreaded Atlas improves over the standard Atlas package that is currently still the default in both distros, and how the Intel MKL (available via Ubuntu) is fairly good but Goto wins almost everything. GPU computing is compelling for really large sizes (at double precision) and too costly at small ones. The chart also illustrates the variability and the different computational cost across the methods tested: svd is more expensive than level-3 matrix multiplication, and there the different implementations are less spread apart. More details are in the paper; code, data, etc. are in the package gcbd.

The larger context is to do something like this benchmarking exercise, but across distributions, operating systems and possibly also GPU cards. Mark and I started to talk about this during and after R/Finance earlier this year and have some ideas. Time permitting, that work should be happening in the GPU/CPU Benchmarks (gcb) project, and that's why this got called gcbd, as a simpler GPU/CPU Benchmarks on Debian Systems study.