RcppFastFloat 0.0.1: New Package, Already on CRAN

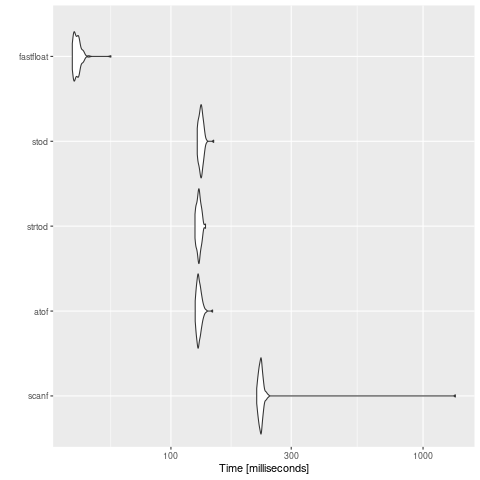

A new package, once again based on a wonderful library by Daniel Lemire, is now on CRAN in its initial version 0.0.1. Daniel, in a recent arXiv paper, shows that one can convert character representations of ‘numbers’ into floating point at rates at or exceeding one gigabyte per second. His tests show a fourfold gain over library functions such as strtod.

We put a simple package together showing use of the example parser, and containing a simple ‘all-in’ comparison benchmark (where we time the function call overhead as well), and get roughly a 3x speedup. See the repo for details; we are borrowing the table and figure here:

`> source("comparison.R")`

Unit: milliseconds

| expr | min | lq | mean | median | uq | max | neval | cld |
|---|---|---|---|---|---|---|---|---|
| scanf | 218.8936 | 224.1223 | 238.5650 | 227.1901 | 229.9116 | 1343.433 | 100 | c |
| atof | 124.8087 | 127.3274 | 129.4104 | 128.5858 | 130.9138 | 146.334 | 100 | b |
| strtod | 124.5705 | 127.2157 | 129.1238 | 129.1042 | 130.7504 | 137.143 | 100 | b |
| stod | 127.1751 | 129.7343 | 131.7339 | 131.4854 | 133.1425 | 147.763 | 100 | b |
| fastfloat | 40.6219 | 41.3042 | 42.5729 | 42.3209 | 43.1738 | 57.788 | 100 | a |
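For readers curious how such a comparison is put together, here is a minimal sketch in plain C++ of timing the standard-library parsers against each other. It is not the package's benchmark: the helper names (`make_inputs`, `sum_strtod`, `sum_atof`, `sum_stod`, `time_ms`) and the generated test data are assumptions made for illustration, and the fast_float parser itself is left out because it needs the external header.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical test data: n decimal strings such as "0.250000", "0.750000", ...
std::vector<std::string> make_inputs(std::size_t n) {
    std::vector<std::string> v;
    v.reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
        v.push_back(std::to_string(static_cast<double>(i) * 0.5 + 0.25));
    }
    return v;
}

// Parse every string with std::strtod and return the sum.
double sum_strtod(const std::vector<std::string>& v) {
    double s = 0.0;
    for (const auto& x : v) s += std::strtod(x.c_str(), nullptr);
    return s;
}

// Same, using std::atof.
double sum_atof(const std::vector<std::string>& v) {
    double s = 0.0;
    for (const auto& x : v) s += std::atof(x.c_str());
    return s;
}

// Same, using std::stod.
double sum_stod(const std::vector<std::string>& v) {
    double s = 0.0;
    for (const auto& x : v) s += std::stod(x);
    return s;
}

// Run one parser over the inputs; return elapsed wall-clock time in
// milliseconds and store the parsed sum in `result` so the work is not
// optimized away.
template <typename F>
double time_ms(F parser, const std::vector<std::string>& v, double& result) {
    const auto t0 = std::chrono::steady_clock::now();
    result = parser(v);
    const auto t1 = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(t1 - t0).count();
}
```

Calling `time_ms(sum_strtod, inputs, result)` repeatedly for each parser on the same `inputs`, and summarising the timings, gives a table of the shape shown above. Because each call includes the loop and function-call overhead, this is an ‘all-in’ measurement rather than a pure parsing rate.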

Or in chart form:

Not much to say yet for the initial release:

Changes in version 0.0.1 (2021-01-31)

- Initial version and CRAN upload

While the package was waiting to be added to CRAN, Brendan already added a potential as.double() replacement which will be in the next version.

If you like this or other open-source work I do, you can now sponsor me at GitHub.

This post by Dirk Eddelbuettel originated on his Thinking inside the box blog. Please report excessive re-aggregation in third-party for-profit settings.