The Comprehensive R Archive Network, or CRAN for short, has been a major driver in the success and rapid proliferation of the R statistical language and environment. CRAN currently hosts around 3400 packages and is growing at a rapid rate. Not too long ago, John Fox gave a keynote lecture at the annual R conference and provided a lot of quantitative insight into R and CRAN, including an estimate of an incredible growth rate of 40%, which shows up as a near-perfect straight line on a chart with a logarithmic vertical axis. So CRAN does in fact grow exponentially. (His talk morphed into this paper in the R Journal; see figure 3 for this chart.)

The success of CRAN is due to a lot of hard work by the CRAN maintainers, led for many years and still today by Kurt Hornik, whose dedication is unparalleled. Even at the current growth rate of several packages a day, all submissions are still rigorously quality-controlled using strong testing features available in the R system.

And for all its successes, and without trying to sound ungrateful, there have always been some things missing at CRAN. It has always been difficult to keep a handle on the rapidly growing archive. Task Views for particular fields, edited by volunteers with specific domain knowledge (including yours truly), help somewhat but still cannot keep up with the flow. What is missing are regular updates on packages. What is also missing is a better review and voting system (and while Hadley Wickham mentored a Google Summer of Code student to write CRANtastic, it seems fair to say that this subproject didn't exactly take off either).

Following useR! 2007 in Ames, I decided to do something and noodled over a first design on the drive back to Chicago. A weekend of hacking

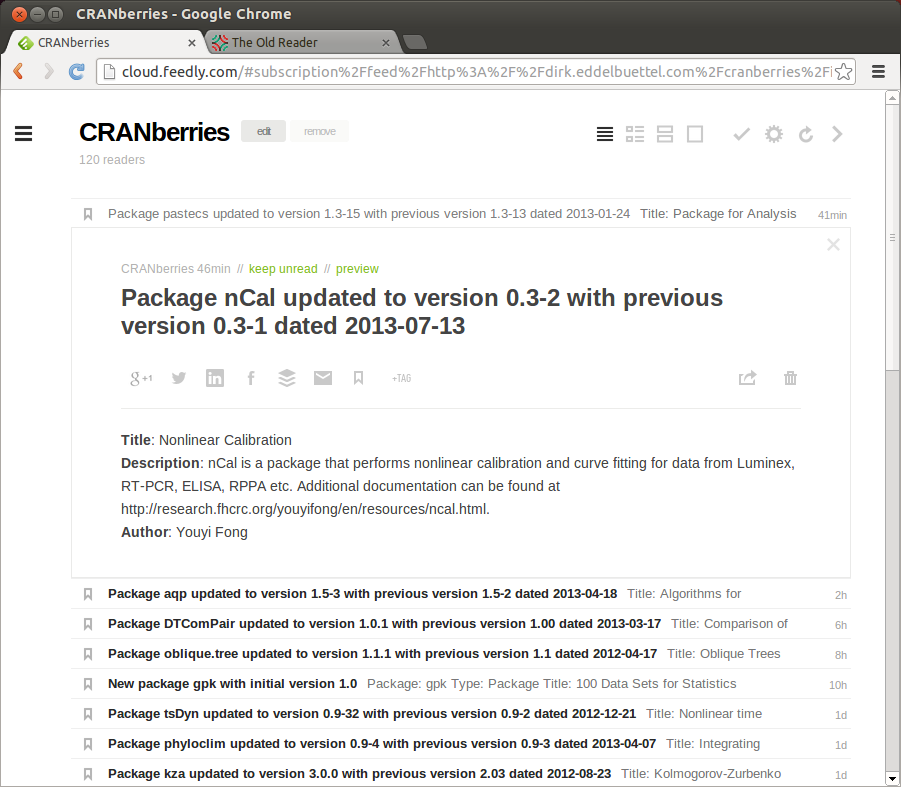

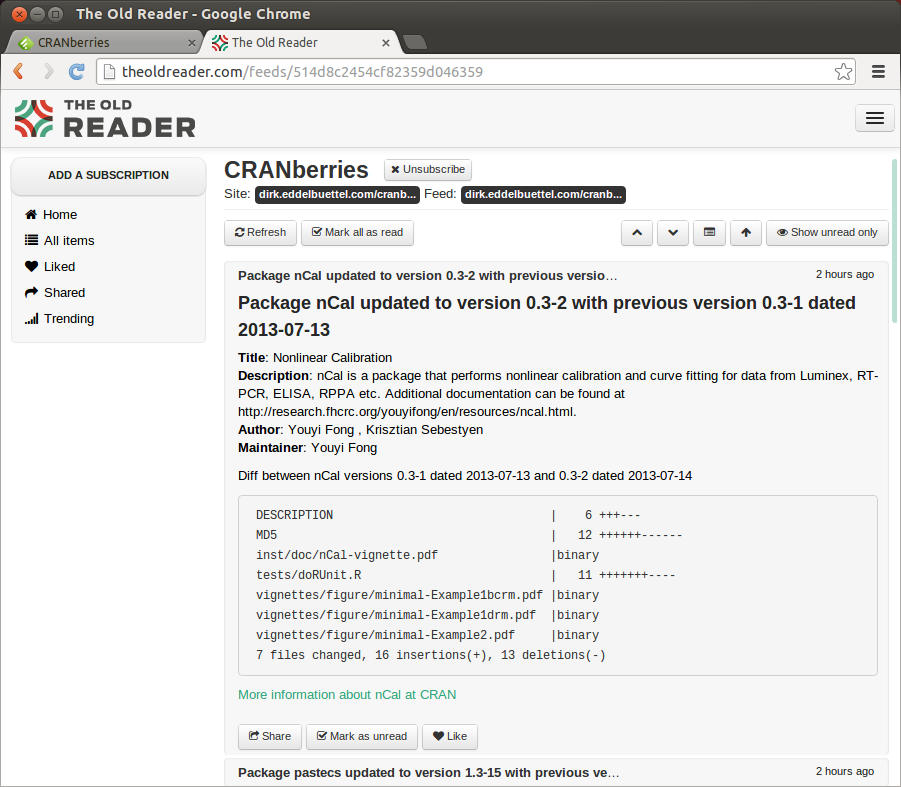

lead to CRANberries. CRANberries uses existing R functions to learn which packages

are available right now, and compares that to data stored in a local SQLite database. This is enough to learn two things: First, which

new packages were added since the last run. That is very useful information, and it feeds a website with blog

subscriptions (for the technically minded: an RSS feed, at this URL).
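To make the comparison concrete, here is a minimal Python sketch of the new-package check. It is not the CRANberries code: it assumes a hypothetical SQLite table `packages(name, version)` holding the state from the previous run, and a snapshot dictionary mapping package names to versions (in R, a listing of this kind is what `available.packages()` returns). A package counts as new if it is in the snapshot but not yet in the table.

```python
import sqlite3


def init_db(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per package with the version seen last run.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS packages ("
        "name TEXT PRIMARY KEY, version TEXT NOT NULL)"
    )


def find_new_packages(conn: sqlite3.Connection, snapshot: dict) -> list:
    """Names present in the current snapshot but absent from the database."""
    known = {row[0] for row in conn.execute("SELECT name FROM packages")}
    return sorted(set(snapshot) - known)


def record_snapshot(conn: sqlite3.Connection, snapshot: dict) -> None:
    # Store the current state so the next run compares against it.
    conn.executemany(
        "INSERT OR REPLACE INTO packages (name, version) VALUES (?, ?)",
        snapshot.items(),
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    init_db(conn)
    record_snapshot(conn, {"zoo": "1.7-6", "xts": "0.8-2"})
    print(find_new_packages(conn, {"zoo": "1.7-6", "xts": "0.8-2", "Rcpp": "0.9.7"}))
    # prints ['Rcpp']
```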

Second, it can also compare current version numbers with the most recently stored version numbers, and thereby learn about updated packages. This too is useful, and it also feeds a website and an RSS stream (at this URL; there is also a combined one for new and updated packages). CRANberries writes out short summaries for new packages (essentially copying what the DESCRIPTION file contains) and a quick diffstat summary for updated packages. A static blog compiler munges this into static HTML pages, which I serve from here, and creates the RSS feed data at the same time.
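The update check can be sketched the same way. This Python version is again hypothetical: `stored` and `snapshot` are plain dictionaries standing in for the database and the current CRAN listing. One detail worth getting right is the comparison itself. R package versions such as `1.7-6` use `.` and `-` as separators, so comparing the strings directly would put `1.7-10` before `1.7-6`; the sketch splits them into integer tuples instead.

```python
import re


def parse_r_version(version: str) -> tuple:
    """Split an R-style version such as '1.7-6' into (1, 7, 6).

    R accepts '.' and '-' as separators in package versions; this sketch
    assumes every component is a plain non-negative integer.
    """
    return tuple(int(part) for part in re.split(r"[.-]", version))


def find_updated_packages(stored: dict, snapshot: dict) -> list:
    """Return (name, old, new) for packages whose version increased."""
    updates = []
    for name, new in sorted(snapshot.items()):
        old = stored.get(name)
        # Packages without a stored version are new, not updated.
        if old is not None and parse_r_version(new) > parse_r_version(old):
            updates.append((name, old, new))
    return updates


if __name__ == "__main__":
    stored = {"zoo": "1.7-6", "xts": "0.8-2"}
    snapshot = {"zoo": "1.7-10", "xts": "0.8-2", "Rcpp": "0.9.7"}
    print(find_updated_packages(stored, snapshot))
    # prints [('zoo', '1.7-6', '1.7-10')]
```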

All this has been operating since 2007. Google Reader tells me the RSS feed averages around 137 posts per week and has about 160 subscribers. It also feeds into Planet R, which redistributes it further, so it is hard to estimate the absolute number of readers. My web server logs also indicate a steady number of visits to the HTML versions.

The most recent innovation was to add tweeting earlier in 2011 under the @CRANberriesFeed Twitter handle. After all, the best way to address information overload and too many posts in our RSS readers surely is to ... just generate more information and add some Twitter noise. So CRANberries now tweets a message for each new package, plus a summary message for each set of new packages (or several if the total length exceeds the 140-character limit). As of today, we have sent 1723 tweets to what are currently 171 followers. Tweets for updated packages were added a few months later.
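Splitting a summary across several messages under the 140-character limit is a small greedy loop: keep appending package names until the next one would overflow, then start a new message. The sketch below is illustrative only; the message prefix and function name are made up, and it assumes any single package name fits in one message.

```python
def pack_messages(names, prefix="New CRAN packages: ", limit=140):
    """Greedily pack names into messages of at most `limit` characters.

    Assumes prefix + any single name fits within `limit`.
    """
    messages = []
    current = ""  # comma-separated names for the message being built
    for name in names:
        candidate = name if not current else current + ", " + name
        if current and len(prefix) + len(candidate) > limit:
            # Adding this name would overflow: close the current message.
            messages.append(prefix + current)
            current = name
        else:
            current = candidate
    if current:
        messages.append(prefix + current)
    return messages


if __name__ == "__main__":
    print(pack_messages(["abc", "def", "gh"], prefix="P: ", limit=10))
    # prints ['P: abc', 'P: def, gh']
```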

Which leads us to today's innovation. One feature that has truly been missing from CRAN is updates about withdrawn packages. Packages can be withdrawn for a number of reasons. Back in the day, CRAN carried so-called bundles, which contained several packages. Examples were VR and gregmisc. Both had long since been split into their component packages, so VR and gregmisc are no longer listed on the main CRAN package page, only in its Archive section. Other examples are packages such as Design, which its author Frank Harrell renamed to rms to match the title of the book covering its methodology. And then there are, of course, packages whose maintainer disappeared, lost interest, or was unable to keep up with the quality requirements imposed by CRAN. All these packages are, of course, still in the Archive section of CRAN.

But how many packages did disappear? Well, compared to the information accumulated by CRANberries over the years, as of today a staggering 282 packages have been withdrawn for various reasons. And I, for one, would like to know more regularly when this happens, if only so I have a chance to see whether the retired package is one of the 120+ packages I still look after for Debian (as happened recently with two Rmetrics packages).
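Detecting withdrawn packages is the mirror image of detecting new ones: a set difference in the other direction, between the names recorded in earlier runs and the names currently listed. The Python sketch below uses hypothetical helper names and is not the CRANberries code; it also intersects the result with a personal watch list, such as the set of packages one maintains for Debian.

```python
def find_removed_packages(stored, snapshot):
    """Names recorded in earlier runs that are no longer listed on CRAN."""
    return sorted(set(stored) - set(snapshot))


def flag_watched(removed, watch_list):
    """Removed packages that also appear on a personal watch list."""
    watched = set(watch_list)
    return [name for name in removed if name in watched]


if __name__ == "__main__":
    stored = ["Design", "VR", "gregmisc", "zoo"]
    snapshot = ["MASS", "rms", "zoo"]
    removed = find_removed_packages(stored, snapshot)
    print(removed)  # prints ['Design', 'VR', 'gregmisc']
    print(flag_watched(removed, ["gregmisc", "zoo"]))  # prints ['gregmisc']
```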

So starting with the next scheduled run, CRANberries will also report removed packages, in its own subtree of the website and with its own RSS feed (which should appear at this URL). I made the required code changes (all of about two dozen lines) and did some light testing. To avoid overwhelming us all with line noise while we catch up to the current steady state of packages, I have (temporarily) lowered the frequency with which cron calls CRANberries.

I also put a cap on the number of removed packages that are reported in each run. As always with new code, there may be a bug or two, but I will try to fix them in due course.
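A per-run cap can be sketched as announcing the first few not-yet-reported removals on each run and leaving the rest for later runs. The cap value and helper names below are hypothetical, since the actual limit is not stated; in a real setup the `reported` set would also have to persist between runs, for example in the same SQLite database.

```python
def next_batch(removed, reported, cap=10):
    """At most `cap` removed packages that have not been announced yet."""
    pending = [name for name in sorted(removed) if name not in reported]
    return pending[:cap]


def simulate_runs(removed, cap=10):
    """Call next_batch as successive runs would, until nothing is pending."""
    reported = set()
    batches = []
    while True:
        batch = next_batch(removed, reported, cap)
        if not batch:
            break
        batches.append(batch)
        reported.update(batch)  # mark as announced for the next run
    return batches


if __name__ == "__main__":
    backlog = [f"pkg{i:02d}" for i in range(25)]
    print([len(batch) for batch in simulate_runs(backlog, cap=10)])
    # prints [10, 10, 5]
```

With the 282 withdrawals counted above and a hypothetical cap of 10, the backlog would clear after 29 runs.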

I hope this is of interest and use to others. If so, please add the RSS feeds to your RSS reader, and follow @CRANberriesFeed on Twitter. And keep using CRAN, and let's all say thanks to Kurt, Stefan, Uwe, and everybody who is working on CRAN (or has worked on it in the past).
